Learning to recognise faces: perceptual narrowing? January 11, 2008

Posted by Johan in Animals, Developmental Psychology, Face Perception, Sensation and Perception.

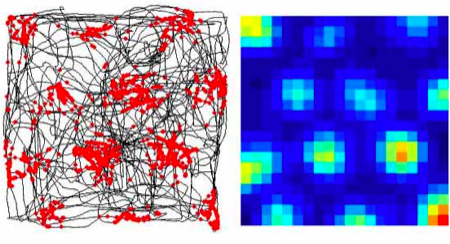

That image certainly piques your interest, doesn’t it? Sugita (2008) set out to address one of the ancient debates in face perception: the role of early experience versus innate mechanisms. In a nutshell, some investigators hold that face perception is a hardwired process, while others argue that every apparently special face perception result can be explained by the massive expertise we all possess with faces compared to other stimuli. There is also some support for a critical period during infancy, in which a lack of face exposure produces irreparable face recognition deficits (see for example Le Grand et al., 2004). Unfortunately, save for the few children who are born with cataracts, there is no real way to address this question in humans.

Enter the monkeys, and the masked man. Sugita (2008) isolated monkeys soon after birth, and raised them in a face-free environment for 6, 12 or 24 months. After this, the monkeys were exposed to strictly monkey or human faces for an additional month.

At various points during this time, Sugita (2008) tested the monkeys on two tasks that were originally pioneered in developmental psychology as means of studying pre-lingual infants. In the preferential looking paradigm, two items are presented, and the time spent looking at each item in the pair is recorded. The monkeys viewed human faces, monkey faces, and objects, in various combinations. It is assumed that the monkey (or infant) prefers whichever item it looks at more. In the paired-comparison procedure, the monkey is first familiarised with a single face, after which it views a face pair, where one of the faces is the same as that viewed before. If the monkey looks at the novel face more, it is inferred that the monkey has recognised the other face as familiar. So the preferential looking paradigm measures preference between categories, while the paired-comparison procedure measures the ability to discriminate items within a category.
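To make the logic of the two measures concrete, here is a minimal sketch of how looking times could be turned into a score. This is not Sugita's actual analysis: the function names and all the numbers are made up for illustration, and the only assumption carried over from the description above is that a proportion of 0.5 means the monkey looked at both items equally.

```python
def looking_proportion(target_time, other_time):
    """Share of total looking time spent on the target item (0.5 = no preference)."""
    total = target_time + other_time
    if total <= 0:
        raise ValueError("no looking time recorded for this trial")
    return target_time / total


def mean_proportion(trials):
    """Average the looking proportion over (target_time, other_time) pairs."""
    if not trials:
        raise ValueError("need at least one trial")
    return sum(looking_proportion(t, o) for t, o in trials) / len(trials)


# Preferential looking: (seconds on monkey face, seconds on human face).
# Hypothetical values; a mean near 0.5 would indicate no category preference.
monkey_vs_human = [(4.0, 4.0), (3.0, 5.0), (5.0, 3.0)]
category_preference = mean_proportion(monkey_vs_human)

# Paired comparison: (seconds on novel face, seconds on familiar face).
# Hypothetical values; a mean well above 0.5 would suggest the familiar face
# was recognised, i.e. the two faces were discriminated.
novel_vs_familiar = [(6.0, 2.0), (4.5, 1.5)]
novelty_preference = mean_proportion(novel_vs_familiar)

print(f"category preference: {category_preference:.2f}")
print(f"novelty preference:  {novelty_preference:.2f}")
```

The same calculation serves both tasks; what differs is what the two items are. In preferential looking they come from different categories, whereas in the paired comparison they come from the same category and differ only in whether the monkey has seen them before.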

Immediately following deprivation, the monkeys showed equal preference for human and monkey faces. By contrast, a group of control monkeys who had not been deprived of face exposure showed a preference for monkey faces. This finding suggests that at the very least, the orthodox hard-wired face perception account is wrong, since under that account the monkeys should prefer monkey faces even without previous exposure to them.

In the paired-comparison procedure, the control monkeys could discriminate between monkey faces but not human faces. By contrast, the face-deprived monkeys could discriminate between both human and monkey faces. This suggests the possibility of perceptual narrowing (the Wikipedia article on it that I just linked is probably the worst I’ve read – if you know this stuff, please fix it!), that is, a tendency for infants to lose their ability to discriminate between categories which are not distinguished in their environment. The classic example occurs in speech sounds, where infants can initially discriminate phoneme boundaries (e.g., the difference between /bah/ and /pah/ in English) that aren’t used in their own language, although this ability is lost relatively early on in the absence of exposure to those boundaries (Aslin et al., 1981). But if this is what happens, then once the face-deprived monkeys have been exposed to faces of one species, surely they should lose their ability to discriminate faces of the other, non-exposed species?

Indeed, this is what Sugita (2008) found. When monkeys were tested after one month of exposure to either monkey or human faces, they now preferred the face type that they had been exposed to over the other face type and non-face objects. Likewise, they could now only discriminate between faces from the category they had been exposed to.

Sugita (2008) didn’t stop there. The monkeys were now placed in a general monkey population for a year, where they had plenty of exposure to both monkey and human faces. Even after a year of this, the results were essentially identical to those obtained immediately following the month of face experience. This implies that once the monkeys had been tuned to one face type, that developmental door was shut, and no re-tuning occurred. Note that in this case, one month of exposure to one type trumped one year of exposure to both types, which shows that as far as face recognition goes, what comes first seems to matter more than what you get the most of.

Note a little quirk in Sugita’s (2008) results – although the monkeys were face-deprived for durations ranging from 6 to 24 months, these groups did not differ significantly on any measures. In other words, however the perceptual narrowing system works for faces, it seems to be flexible about when it starts – it’s not a strictly maturational process that kicks in at a genetically specified time. This conflicts quite harshly with the cataract studies I mentioned above, where human infants seem to lose face processing ability quite permanently when they miss out on face exposure in their first year. One can’t help but wonder if Sugita’s (2008) results could be replicated with cars, houses, or any other object category instead of faces, although this is veering into the old ‘are faces special’ debate… It’s possible that the perceptual narrowing observed here is a general object recognition process, unlike the (supposedly) special mechanism with which human infants learn to recognise faces particularly well.

On the applied side, Sugita (2008) suggests that his study indicates a mechanism for how the other-race effect occurs – that is, the advantage that most people display in recognising people of their own ethnicity. If you’ve only viewed faces of one ethnicity during infancy (e.g., your family), perhaps this effect has less to do with racism or living in a segregated society, and more to do with perceptual narrowing.

References

Sugita, Y. (2008). Face perception in monkeys reared with no exposure to faces. Proceedings of the National Academy of Sciences (USA), 105, 394-398.